TABLE OF CONTENTS

Quick Summary

What is Multimodal AI?

How does multimodal AI work?

Multimodal AI Use Cases for Businesses

Multimodal AI Tools in 2026

Multimodal AI vs. Unimodal AI

Popular Multimodal AI Models

Multimodal AI Examples

Benefits of Multimodal AI

Types of Multimodality in AI

Multimodal AI Risks

Components of Multimodal AI Models

Future of Multimodal AI

Case Studies / Success Stories

Conclusion

FAQs

In the last five years, I've personally seen Artificial Intelligence (AI) evolve more than it did in the almost two decades before. Today, we use everything from simple chatbots to advanced AI models that can even autonomously manage self-driving cars. Businesses choose AI systems based on their needs: for basic question answering, a simple chatbot is enough, but for an advanced AI that can listen, talk, and even "understand," they turn to multimodal AI.

Traditional models use only one form of input, as in text-only chatbots or image-only recognition systems. Multimodal models use multiple senses simultaneously, just as we humans process sight, sound, speech, and context at the same time.

In this detailed article, we'll explore multimodal AI: how it works, how it helps in real business applications, and its overall benefits and risks.

Quick Summary

Simple chatbots are quite useful, but they follow fixed rules, which prevents them from providing flexible answers. Today's businesses operate across a wide range of sectors, and a single simple chatbot can't serve their whole customer base, forcing them to integrate multiple chatbots. Businesses are now using multimodal AI to solve this problem.

The main benefit of multimodal AI is that its bots can change roles based on the task and use multiple senses simultaneously, making them capable of handling a wide range of tasks. This is why companies like IBM are conducting extensive research on multimodal technologies.

Our focus in this article:

- What is multimodal AI and how does it work?

- What are the current use cases and sectors for multimodal AI?

- What risks and benefits are we currently seeing in multimodal AI?

- What is the future of multimodal AI?

What is Multimodal AI?

In simple terms, multimodal AI is a type of artificial intelligence system that can process multiple types of data at the same time, without any human assistance.

An everyday comparison helps: when we humans talk to someone, we use our eyes to see, our voice to speak, and our ears to hear, all at once. Multimodal AI works in a similar way.

- Unimodal AI can mostly process only one type of data at a time. For example, if your input is text, it will process and respond in text, not in media formats.

- Multimodal AI can read emails, documents, and social media posts simultaneously.

- It can easily analyze images, photos, medical scans, and even product pictures.

- Multimodal chatbots can interpret voices, tones, and even music in audio format.

- It can recognize moving visuals and sound, so it can understand and even search through videos.

- It can easily process sensor data such as IoT signals, LiDAR, GPS, and biometrics.

This is why Multimodal AI is considered contextually richer, more human-like, and highly accurate, which is the main reason why businesses are so interested in it.

How does multimodal AI work?

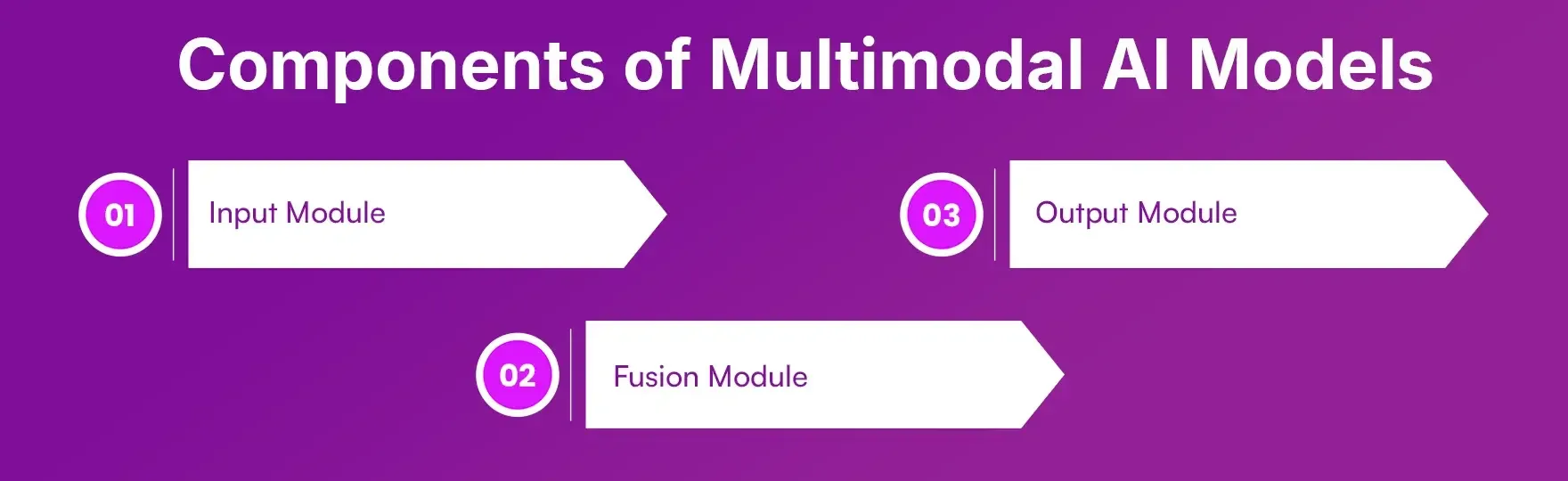

Now that we understand what multimodal AI is, it will be interesting to understand how it works. Let's understand multimodal AI through its three main components.

1. Input Module: First, the system takes in the given input, whether text, images, audio, or video, and converts each type into a numerical representation using a specialized encoder. For example, a language model processes the text, while a vision encoder handles the images.

2. Fusion Module: This is where the core work of a multimodal model happens. The system aligns and combines the data from the different modalities. Fusion can happen at three stages:

Early Fusion: The raw data from all modalities is combined before any processing.

Intermediate Fusion: Each modality is partially processed on its own, and the resulting features are merged midway through the model.

Late Fusion: Each modality is processed independently all the way to an output, and those outputs are combined only at the end.

Many self-driving cars rely heavily on late fusion, combining data from LiDAR, radar, GPS, and cameras to make safe navigation decisions.

3. Output Module: Finally, the user gets a context-aware output based on the fused input. This can be a simple text response, an image caption, or a critical decision such as flagging a fraudulent transaction. Because the model is multimodal, it tries to give the most relevant output by drawing on every available perspective and context.
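
To make the three fusion stages a little more concrete, here is a minimal Python sketch. Everything in it is an illustrative assumption: the feature values are hand-made numbers, and `encode`, `score`, and the weights are hypothetical stand-ins, not any real model's API.

```python
# Toy illustration of early, intermediate, and late fusion.
# All numbers, weights, and function names are hypothetical.

def encode(features, scale):
    """Stand-in for a modality-specific encoder: rescales raw features."""
    return [scale * x for x in features]

def score(features, weights):
    """Stand-in for a model head: a weighted sum of features."""
    return sum(w * x for w, x in zip(weights, features))

image_raw = [0.2, 0.8]   # pretend image statistics
audio_raw = [0.5, 0.1]   # pretend audio statistics

# Early fusion: concatenate the raw inputs, then run one model on the result.
early_input = image_raw + audio_raw
early_score = score(early_input, [0.5, 0.5, 0.5, 0.5])

# Intermediate fusion: encode each modality first, then merge the features.
image_feat = encode(image_raw, 2.0)
audio_feat = encode(audio_raw, 0.5)
mid_score = score(image_feat + audio_feat, [0.5, 0.5, 0.5, 0.5])

# Late fusion: each modality gets its own prediction; only outputs are combined.
image_score = score(image_feat, [0.5, 0.5])
audio_score = score(audio_feat, [0.5, 0.5])
late_score = 0.7 * image_score + 0.3 * audio_score
```

In the late-fusion branch, the per-modality predictions stay separate until the final weighted average. One commonly cited reason for choosing it is that a noisy source can be down-weighted without touching the other modalities.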

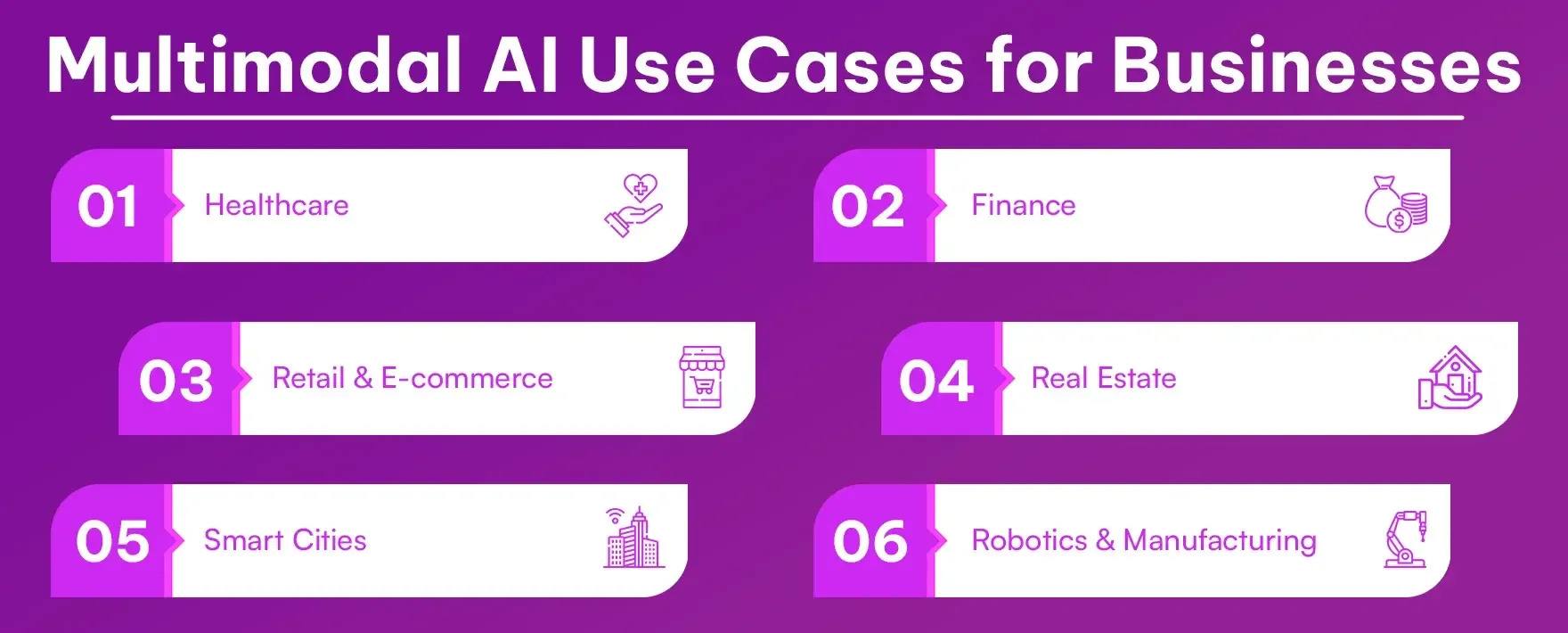

Multimodal AI Use Cases for Businesses

I've seen many businesses curious about how they can use multimodal AI. First, though, it's important to understand what its major use cases are.

Healthcare: Many healthcare companies use multimodal AI to help diagnose skin-related diseases using patient history and medical images. Stanford's study achieved 87% accuracy.

Finance: Many banks and institutions use multimodal AI for fraud detection, drawing on transaction logs, market data, and even voice tone. JPMorgan's COIN platform is cited as one example.

Retail & E-commerce: Many e-commerce platforms, such as Amazon, use AI-powered product recommendations based on users' visual and behavioral data, helping the platform drive more sales.

Real Estate: Many real estate companies use multimodal AI to estimate property valuations almost instantly, combining property images with purchase history.

Smart Cities: Many global platforms, such as Singapore's "Virtual Singapore," use surveillance cameras, air-quality sensors, and traffic data for citizen reports or urban planning.

Robotics & Manufacturing: Most collaborative robots, such as ABB's YuMi, use cameras, pressure sensors, and position data to safely assemble products.

Multimodal AI Tools in 2026

There are many popular multimodal AI tools available in 2026, used for different purposes, and you may already be familiar with many of them.

Google Gemini: This is Google's AI product that integrates images, text, and other data, making it easy to generate output in text or image formats.

Vertex AI (Google Cloud): An enterprise-ready platform, mostly used by businesses, that supports multimodal workflows.

OpenAI CLIP: This model maps text and images into a shared embedding space, which makes it useful for visual search and for matching images to captions.

Hugging Face Transformers: This is an open-source library that provides strong support for multimodal models.

Meta ImageBind: This model binds six modalities into one embedding space: images (including video), text, audio, depth, thermal, and motion-sensor (IMU) data.

Runway Gen-2: This model is known for its high-quality video generation, allowing you to generate videos from basic text prompts.

DALL·E 3: This model is mostly used for image generation, taking a text prompt as input.

Inworld AI: This platform targets the gaming sector and lets you build intelligent, interactive game characters quickly.

I have personally used CLIP in the past for my visual search projects and was quite surprised by how efficient the results were, as it connected quickly with my e-commerce datasets.
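
The visual-search idea behind CLIP-style models can be sketched without the model itself: text and images are mapped into one shared vector space, and search becomes a nearest-neighbor lookup by cosine similarity. The sketch below assumes the embeddings already exist. The three-dimensional vectors, file names, and the `search` helper are made up for illustration, and no real CLIP API is called.

```python
import math

def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

# Hypothetical image embeddings for a tiny product catalog.
catalog = {
    "red_sneaker.jpg": [0.9, 0.1, 0.0],
    "blue_backpack.jpg": [0.1, 0.9, 0.2],
    "green_jacket.jpg": [0.0, 0.2, 0.9],
}

def search(query_embedding, image_embeddings, top_k=2):
    """Rank images by similarity to a text-query embedding."""
    ranked = sorted(
        image_embeddings.items(),
        key=lambda item: cosine_similarity(query_embedding, item[1]),
        reverse=True,
    )
    return [name for name, _ in ranked[:top_k]]

# Hypothetical embedding of the text query "red running shoes".
query = [0.8, 0.2, 0.1]
results = search(query, catalog)
# results == ["red_sneaker.jpg", "blue_backpack.jpg"]
```

In a real project, the vectors would come from a trained text encoder and image encoder rather than being typed in by hand; the ranking step would stay essentially the same.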

Multimodal AI vs. Unimodal AI

Let us compare multimodal and unimodal AI in detail in a table. This will give you an overall idea of the basic differences between the two.

| Feature / Aspect | Unimodal AI | Multimodal AI |
|---|---|---|
| Data Input | Works with a single type of data, such as text only, images only, or audio only. | Integrates multiple data types, such as text, images, audio, video, and sensor data, simultaneously. |
| Context Awareness | Has limited understanding; misses nuances beyond its single modality. | Provides richer, context-aware insights by combining signals from multiple sources. |
| Output Capability | Generates output in the same modality as the input, such as text to text. | Can produce outputs in multiple formats, for example text, image, and video. |
| Application Scope | Best suited for narrow, specialized tasks such as speech-to-text or image recognition. | Can handle complex, real-world scenarios (for example, medical diagnostics, autonomous driving, smart assistants). |
| Accuracy & Reliability | Relies on a single data source, which may lead to errors if the data is noisy or incomplete. | Cross-validates information across modalities, which can improve accuracy and robustness. |
| User Interaction | Offers basic interactions, usually limited to one input method. | Enables natural, human-like interactions by combining voice, gestures, images, and more. |
| Performance in Complex Environments | Struggles with tasks requiring cross-domain reasoning. | Excels in dynamic, multi-signal environments (healthcare, robotics, finance). |
| Examples | GPT-3 (text-only), traditional speech recognition systems. | GPT-4V (text + image), Google Gemini, Meta ImageBind, Runway Gen-2. |

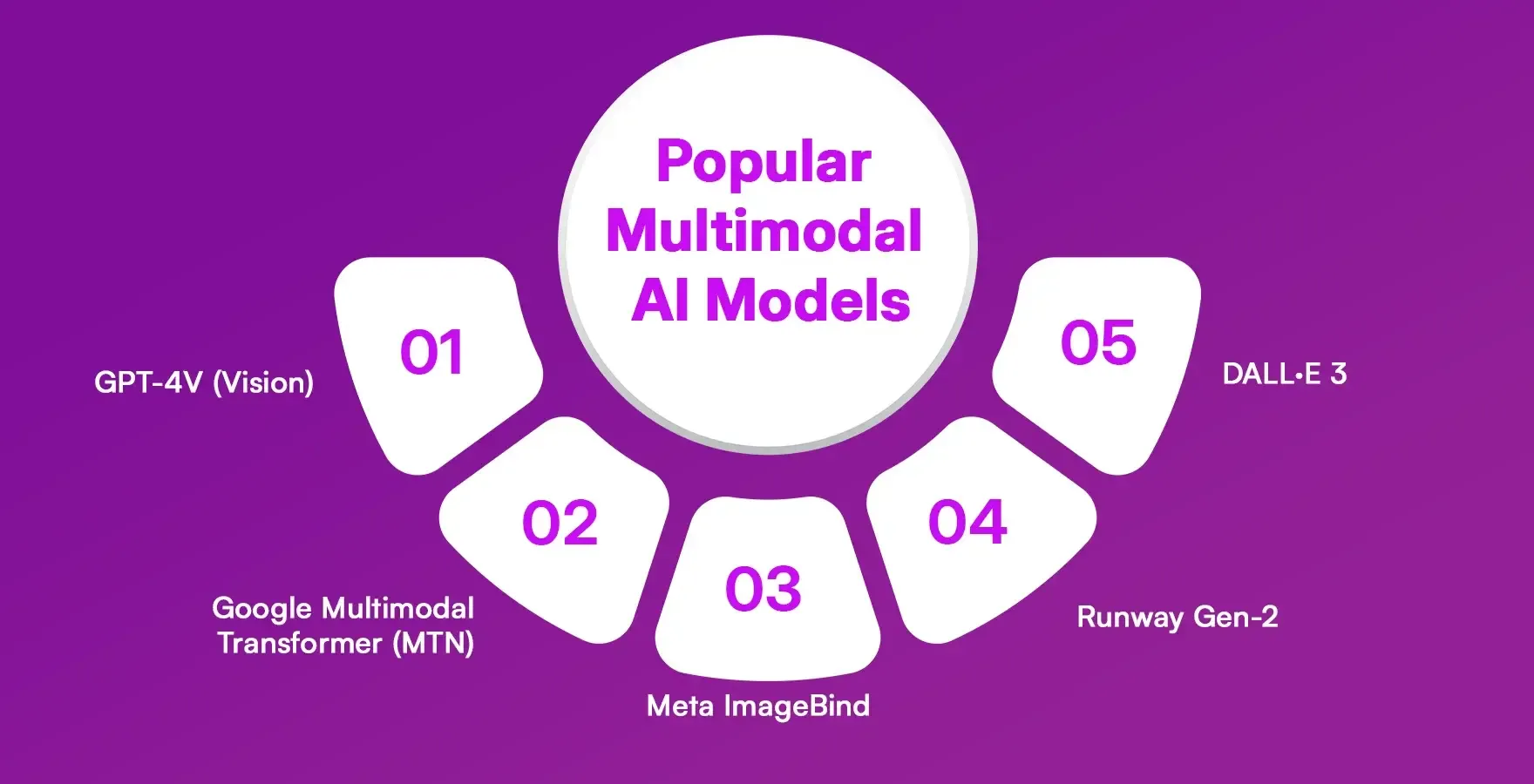

Popular Multimodal AI Models

Let's learn about the most powerful and popular multimodal AI models. You may find that you have already been exposed to several of them.

GPT-4V (Vision): This is the vision-enabled version of OpenAI's GPT-4, available through ChatGPT, that combines text and images for understanding. It is becoming quite popular globally.

Google Multimodal Transformer (MTN): This model is used by Google to process audio, text, and images. Google uses MTN in many of its products, such as Gemini and other AI tools.

Meta ImageBind: This model works across six modalities and learns a single shared embedding for them, which supports tasks such as cross-modal retrieval and generation.

Runway Gen-2: This platform is known for its video generation, and its Gen-2 model can generate very high-quality, realistic video.

DALL·E 3: This model is very popular in the image generation sector and can generate very realistic images.

Multimodal AI Examples

Let's explore the top examples of multimodal AI.

Virtual assistants that can respond to human voice and gestures can be called multimodal.

Most smart home assistants are multimodal because they can recognize audio, text, or even gestures.

Manufacturing robots equipped with sensors and cameras are among the best examples of multimodal AI.

Drones and cameras used for urban planning also fall into the multimodal category because they process a lot of data simultaneously.

Benefits of Multimodal AI

Let's try to understand in detail what benefits you can get if you use multimodal AI.

Multimodal AI provides richer contextual understanding.

Chatbots and systems based on multimodal AI significantly improve accuracy because they make decisions based on multiple factors and signals.

Multimodal AI supports human-like interaction, including high-quality emotion and tone detection.

Multimodal AI is known for its versatility, as it fits across industries and can be used for multiple purposes within a single company.

Advanced multimodal systems enable better decision-making in complex environments, as a growing body of research suggests.
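
The accuracy benefit can be illustrated with a small worked calculation. The sketch below is a simplification, not a claim about any real system: it assumes a yes/no decision, three signals that are each right 80% of the time, and errors that are fully independent of each other, which real modalities rarely are. The function name `majority_vote_accuracy` is hypothetical.

```python
from itertools import product

def majority_vote_accuracy(accuracies):
    """Probability that a strict majority of independent voters is correct."""
    n = len(accuracies)
    total = 0.0
    # Enumerate every combination of correct (True) / wrong (False) voters.
    for outcome in product([True, False], repeat=n):
        prob = 1.0
        for correct, acc in zip(outcome, accuracies):
            prob *= acc if correct else (1.0 - acc)
        if sum(outcome) > n / 2:
            total += prob
    return total

# Three hypothetical signals (say text, image, audio), each 80% accurate.
combined = majority_vote_accuracy([0.8, 0.8, 0.8])
# 0.8**3 + 3 * 0.8**2 * 0.2 = 0.512 + 0.384 = 0.896
```

Under these assumptions, the combined decision is right about 89.6% of the time, compared with 80% for any single signal. When the signals make correlated mistakes, the gain shrinks.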

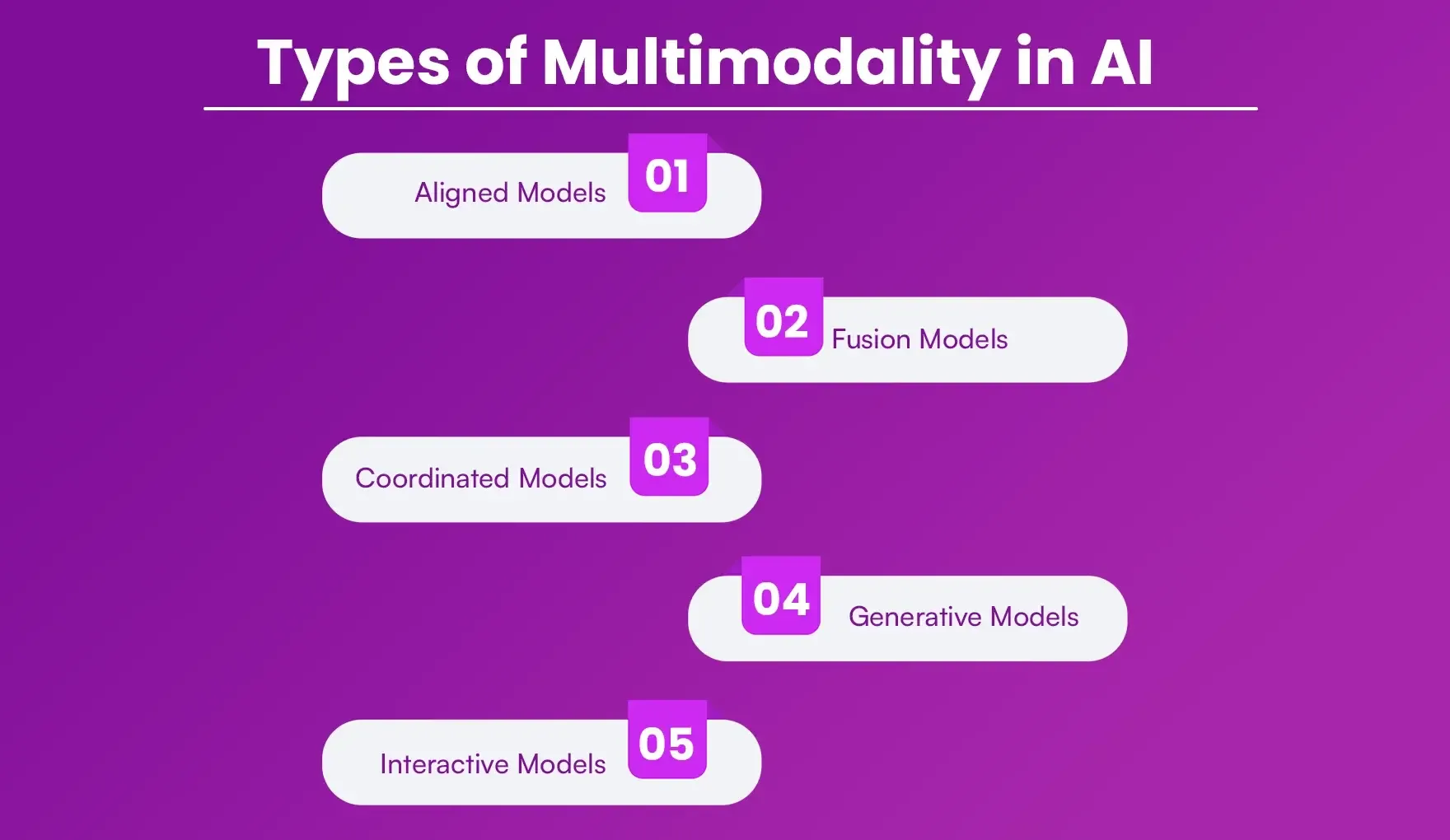

Types of Multimodality in AI

Different sectors call for different types of multimodality, and professionals choose the type that best fits the task. Let's learn about the main ones.

Aligned Models: These models align visual and textual elements to produce image-based output. These models are used for image analysis or captioning.

Fusion Models: These models combine data at different stages of processing, self-driving cars being a prime example.

Coordinated Models: All modalities work separately and are ultimately combined. This is widely used in the banking sector.

Generative Models: These models create new outputs by merging different modalities.

Interactive Models: These models are specifically used for real-time collaboration with humans, best examples being virtual assistants and smart devices.

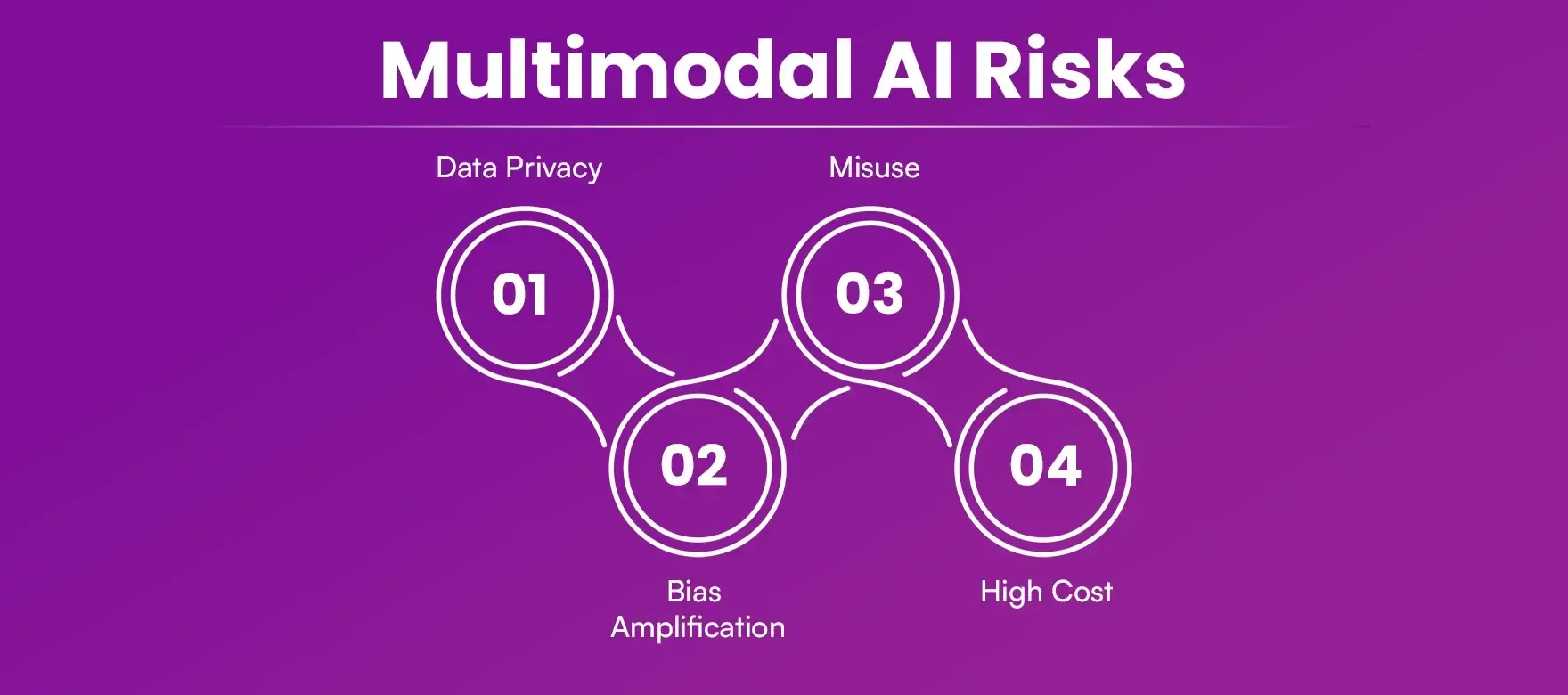

Multimodal AI Risks

As we know, multimodal is an evolving sector, and therefore, it also poses significant risks. Let's understand them in detail.

Data Privacy: Companies that build multimodal systems handle massive amounts of personal data, which raises numerous ethical questions regarding data security.

Bias Amplification: If multimodal training data is biased, the model will also produce biased results. Therefore, it is crucial that the training data be large, diverse, and representative, so that bias is reduced as far as possible.

Misuse: In recent years, the risks of deepfakes, surveillance, and disinformation have increased significantly due to multimodal models, which is a concerning issue for many countries.

High Cost: Training multimodal models requires significant computing power, which leads to high training and maintenance costs.
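
One simple way to look for the bias risk described above is to compare a model's accuracy across groups in an evaluation set. The sketch below is only an illustration: the records, group names, and labels are invented, and `accuracy_by_group` is a hypothetical helper, not part of any library.

```python
from collections import defaultdict

def accuracy_by_group(records):
    """Return {group: accuracy} for (group, predicted, actual) records."""
    correct = defaultdict(int)
    total = defaultdict(int)
    for group, predicted, actual in records:
        total[group] += 1
        if predicted == actual:
            correct[group] += 1
    return {group: correct[group] / total[group] for group in total}

# Hypothetical evaluation records: (group, predicted label, true label).
records = [
    ("group_a", "benign", "benign"),
    ("group_a", "malignant", "malignant"),
    ("group_a", "benign", "benign"),
    ("group_a", "benign", "benign"),
    ("group_b", "benign", "malignant"),
    ("group_b", "benign", "benign"),
]

per_group = accuracy_by_group(records)
# Largest difference in accuracy between any two groups.
gap = max(per_group.values()) - min(per_group.values())
```

A large gap, together with a group that has very few records, is a hint that the training or evaluation data may under-represent that group and deserves a closer look.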

Components of Multimodal AI Models

Currently, multimodal models follow the three-component rule. Let's understand it.

Input Module: It encodes text, images, video, and audio into a form the model can work with, and is the beginning of the process.

Fusion Module: It combines the encoded data using early, intermediate, or late fusion. It plays a very important role in giving multimodal processing its contextual awareness.

Output Module: It generates the final response based on the model's predictions. This is the final component of multimodal processing.
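
The three components can be wired together in a few lines of Python. This is a toy sketch under stated assumptions: the "encoders" just compute simple statistics, the decision rule is an arbitrary threshold, and the names `input_module`, `fusion_module`, `output_module`, and `Encoded` are hypothetical, chosen to mirror the component names above.

```python
from dataclasses import dataclass

@dataclass
class Encoded:
    modality: str
    features: list

def input_module(raw_inputs):
    """Encode each modality separately (toy encoders)."""
    encoded = []
    for modality, data in raw_inputs.items():
        if modality == "text":
            # Toy text encoder: word count and character count.
            features = [float(len(data.split())), float(len(data))]
        else:
            # Toy encoder for numeric signals: mean and max.
            features = [sum(data) / len(data), float(max(data))]
        encoded.append(Encoded(modality, features))
    return encoded

def fusion_module(encoded):
    """Intermediate fusion: concatenate the per-modality features."""
    fused = []
    for item in encoded:
        fused.extend(item.features)
    return fused

def output_module(fused, threshold=10.0):
    """Toy decision head: flag the input when the feature sum is large."""
    return "flag for review" if sum(fused) > threshold else "ok"

# Hypothetical input: a short message plus a few audio-level readings.
raw = {"text": "urgent wire transfer", "audio": [0.2, 0.9, 0.4]}
decision = output_module(fusion_module(input_module(raw)))
```

In a real system, each function would be replaced by a trained component, but the data flow (encode each input, fuse, then decide) would follow the same shape.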

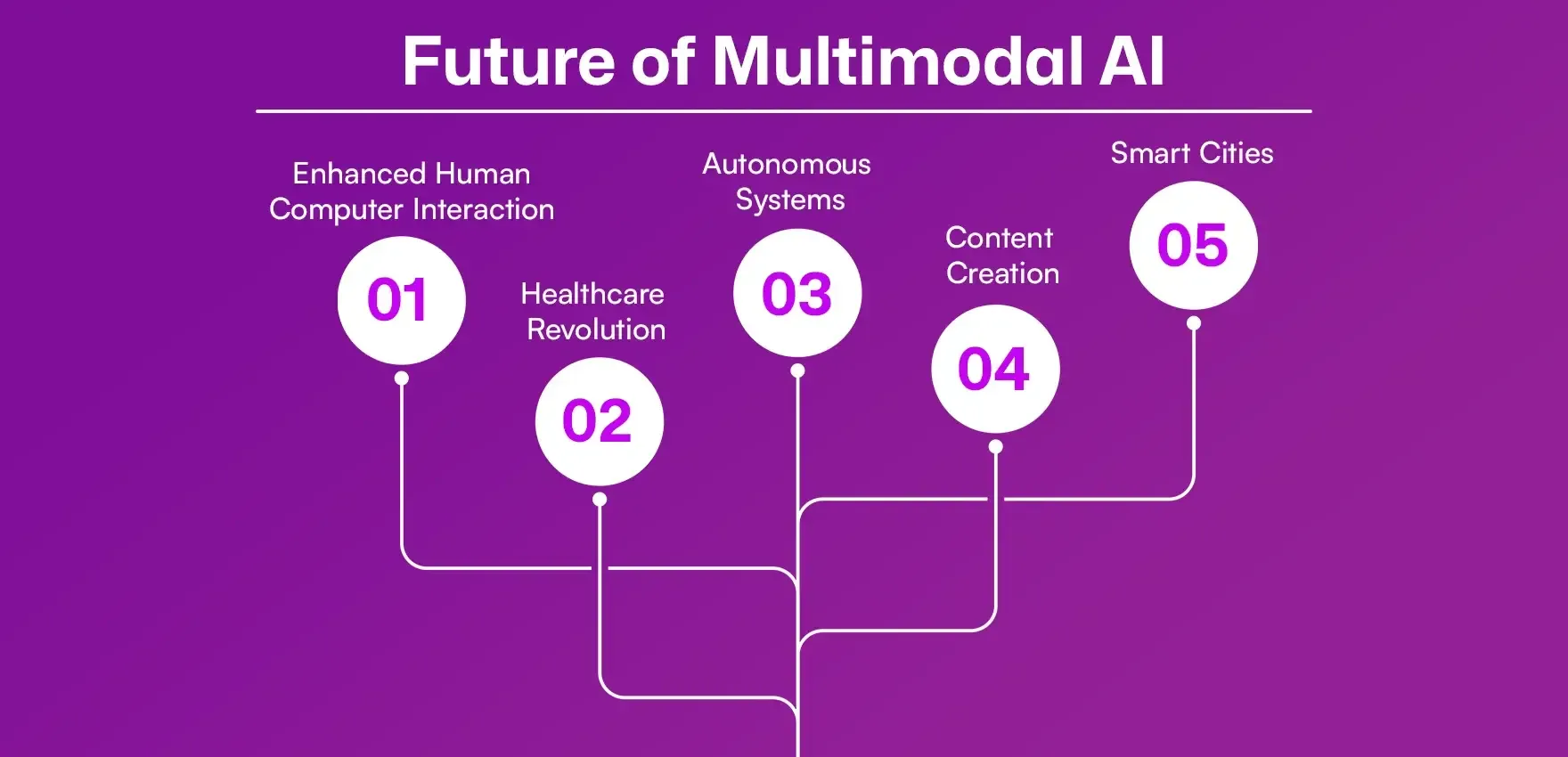

Future of Multimodal AI

Many AI experts say that multimodal AI, generative AI, and AI agents are the future, and expect them to be used extensively in almost all businesses by 2030. Let's learn about some future trends.

Enhanced Human-Computer Interaction: Upcoming versions of multimodal AI will provide more natural interactions with chatbots, to the point where you may not be able to tell whether you're talking to a chatbot or a human.

Healthcare Revolution: A lot of medical research is already underway, and multimodality has significantly accelerated the pace of research and testing. This will lead to more personalized medicine in the future, even for specific cities and environments. Furthermore, the speed of disease diagnosis will also increase significantly.

Autonomous Systems: Autonomous systems are increasingly used in robots and self-driving vehicles, enhancing both their intelligence and safety.

Content Creation: Multimodality is also increasingly used in content creation, mostly in media sectors. Furthermore, if you require copywriting in your business, you can use BypassAi.io.

Smart Cities: Many cities around the world are already using multimodal AI for urban planning, which helps them achieve sustainable growth.

Case Studies / Success Stories

If you're considering using multimodal AI in your business, these case studies will further motivate you to use it.

Stanford Medicine: Stanford Medicine has approved multimodal AI for melanoma diagnostics and detection, and according to the department, its overall accuracy is excellent.

JPMorgan COIN: JPMorgan, one of America's largest financial institutions, has also approved the use of multimodal AI in its COIN platform for document review and fraud detection, making banking faster and more secure.

Virtual Singapore: Singapore, one of the world's most organized cities, is also using AI-driven systems for city planning, traffic management, and environmental protection.

ABB YuMi Robot: Advanced robots such as ABB's YuMi use multimodal AI for safe collaborative assembly.

Real Estate AI: Many real estate companies in Dubai are using multimodal AI for automated property valuations based solely on interior images and market data.

Multimodal AI is just getting started; in the next few years, global businesses and governments will start using it for various projects.

Conclusion

Multimodal AI isn't just an experiment or a theoretical concept these days; it's having a significant impact on the present and future, with applications ranging from diagnosing rare diseases to planning smart cities. These systems are making even virtual assistants context-aware, ushering in a next wave that will significantly support human-AI collaboration.

Over the past year, I've used a number of multimodal AI tools, such as GPT-4V and Runway Gen-2, and I can confidently say that significant developments will occur in the multimodal sector by 2026. And I believe that those who adopt multimodal chatbots early in their businesses will benefit significantly, further boosting efficiency, personalization, and innovation.

Also, if you require copywriting in your business, you might prefer the Bypass Ai platform, which offers all the essential writing tools.

FAQs

1. What is multimodal AI in simple terms?

In simple terms, multimodal AI is an AI system, such as a chatbot, that can handle different types of input, such as text, images, and audio, at the same time, and can produce output in more than one format.

2. How is multimodal AI different from unimodal AI?

Unimodal AI can process only one type of data at a time, while multimodal AI can handle multiple types of input simultaneously, giving you more flexible, higher-quality answers.

3. Which are the most popular multimodal AI models?

If we look at the most popular multimodal AI models today, we will see GPT-4V, DALL·E 3, Google Gemini, Runway Gen-2, Meta ImageBind, and OpenAI CLIP.

4. What are the main benefits of multimodal AI?

Multimodal AI tends to have better accuracy and greater contextual awareness, enabling human-like interaction and decision-making.

5. Where is multimodal AI used in real life?

In real life, multimodal AI is most widely used in healthcare, finance, real estate, robotics, smart cities, retail, and digital assistants.

6. What risks come with multimodal AI?

Multimodal AI is an evolving sector, and its use also carries risks, such as data privacy concerns, bias in datasets, high costs, and misuse in surveillance or misinformation. Therefore, multimodal AI requires proper monitoring.

7. What is the future of multimodal AI?

I believe that in the future, multimodal AI will support more human-like conversation and interaction and will be used extensively for personalized healthcare, autonomous systems, and immersive AR/VR experiences.