TABLE OF CONTENTS

What Are AI Detectors and How Do They Work?

What Do We Mean by "False Results"?

Why Detectors Track Patterns, Not True Understanding

Human Writing Can Look Like AI Writing

Why Short Texts and Edited Content Fail Detection

Impact of False Results on Writers and Creators

Are AI Detectors Reliable Enough Today?

How to Protect Your Work from False Detection

Best Practices to Avoid False Detection Issues

The Future of AI Detection Technology

Conclusion

AI detection tools have become common in sectors such as education, content creation, and digital marketing. Teachers use them to check students' work, employers to screen job applications, and content platforms to monitor submissions. If you're curious about how your content would perform, you can test it with a free AI content detector to see firsthand how these tools analyze writing.

Despite their widespread use, these tools are less accurate than many people assume. More often than one would like to believe, genuine human writing is judged to be AI-produced, and vice versa.

Such mistakes can go unnoticed by users, with serious consequences for the authors and artists who rely on the accuracy of the tools.

What Are AI Detectors and How Do They Work?

AI detectors estimate the probability that a text was written by a human or generated by AI, using statistical models trained to spot patterns common in AI-generated text. They analyze sentence structure, word choice, predictability, and language use. To understand the technical details behind how AI detectors analyze text, it helps to examine the machine learning algorithms and pattern recognition systems they employ.

Detection tools report a percentage score based on how closely the text follows patterns the model saw during training. However, they do not "understand" the text; they only compute probabilities over statistical patterns, and this is where the problems start.
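To make the idea of a probability-based score concrete, here is a minimal sketch. It does not reproduce how any particular detector works: the tiny reference text, the unigram word-frequency model, and the function name `average_surprisal` are all assumptions chosen for illustration. It measures how predictable each word is under simple frequency statistics, whereas a real tool would rely on a far larger language model.

```python
import math
from collections import Counter

# Hypothetical reference text standing in for a model's training data.
REFERENCE = (
    "the report shows that the results are clear and the data is consistent "
    "the study shows that the method is effective and the results are reliable"
)

def average_surprisal(text: str, reference: str = REFERENCE) -> float:
    """Mean surprisal (in bits) of the words in `text` under a unigram
    model estimated from `reference`, with add-one smoothing."""
    counts = Counter(reference.lower().split())
    total = sum(counts.values())
    vocab = len(counts) + 1  # +1 reserves probability mass for unseen words
    words = text.lower().split()
    if not words:
        raise ValueError("text must contain at least one word")
    bits = [-math.log2((counts[w] + 1) / (total + vocab)) for w in words]
    return sum(bits) / len(bits)

predictable = "the results are clear and the data is consistent"
surprising = "my grandmother's kitchen smelled of cardamom every winter"

# Lower values mean the wording is more predictable under the toy model.
print(average_surprisal(predictable))
print(average_surprisal(surprising))
```

A detector built in this spirit would read low surprisal as "AI-like". The sketch never looks at meaning, only at how expected each word is, which is why conventional human prose can score badly.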

What Do We Mean by "False Results"?

A false positive occurs when human-written text is mistakenly marked as AI-generated. For instance, a student's original essay might be scored as 80% AI-written simply because it follows formal academic conventions.

A false negative, on the other hand, lets AI-generated content pass unnoticed, usually because it has been lightly modified or uses unexpected patterns. Both kinds of error weaken the reliability of these detection systems. For a deeper dive into this phenomenon, read more about understanding false positives in AI detection and their real-world implications.
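The two error types can be counted separately once the true authorship of each text is known. The sketch below is illustrative only: the `Outcome` class, the `error_rates` function, and the eight sample records are made up, not measurements from any real detector.

```python
from dataclasses import dataclass

@dataclass
class Outcome:
    written_by_ai: bool   # ground truth
    flagged_as_ai: bool   # detector verdict

def error_rates(outcomes):
    """Return (false_positive_rate, false_negative_rate)."""
    human = [o for o in outcomes if not o.written_by_ai]
    ai = [o for o in outcomes if o.written_by_ai]
    false_positives = sum(o.flagged_as_ai for o in human)
    false_negatives = sum(not o.flagged_as_ai for o in ai)
    fpr = false_positives / len(human) if human else 0.0
    fnr = false_negatives / len(ai) if ai else 0.0
    return fpr, fnr

# Made-up sample: 4 human texts (1 wrongly flagged), 4 AI texts (2 missed).
sample = [
    Outcome(False, False), Outcome(False, False),
    Outcome(False, False), Outcome(False, True),
    Outcome(True, True), Outcome(True, True),
    Outcome(True, False), Outcome(True, False),
]

fpr, fnr = error_rates(sample)
print(f"false positive rate: {fpr:.2f}")  # 0.25
print(f"false negative rate: {fnr:.2f}")  # 0.50
```

Reporting the two rates separately matters because a tool can look accurate overall while still flagging a meaningful share of honest writers.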

Why Detectors Track Patterns, Not True Understanding

AI content detection relies on identifying recurring patterns typical of AI-generated text, such as certain transition words, particular sentence lengths, characteristic phrase structures, and vocabulary that AI models favor.

The issue is that humans also apply these patterns, especially in professional and academic contexts. Business reports, research papers, and technical documentation are likely to have structured formats with consistent terminology and formal language.

Detectors cannot differentiate between a human who follows professional writing conventions and an AI that imitates those same conventions. Both create similar patterns, thus making it nearly impossible to draw accurate conclusions based solely on text analysis.

This inherent weakness means that detectors will always struggle with well-written, formal content, regardless of its source. Research shows that AI detector accuracy varies significantly depending on writing style, content type, and the specific tool being used.
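The kinds of surface patterns described in this section can be sketched in a few lines. The feature set, the `TRANSITIONS` word list, and the function name `surface_features` are hypothetical; real detectors learn their own signals from training data rather than using a hand-written list like this one.

```python
import re
import statistics

# Hypothetical list of transition words, chosen only for illustration.
TRANSITIONS = {
    "however", "moreover", "furthermore",
    "therefore", "additionally", "consequently",
}

def surface_features(text: str) -> dict:
    """Compute simple pattern features: sentence lengths and transition use."""
    sentences = [s for s in re.split(r"[.!?]+\s*", text) if s.strip()]
    words = re.findall(r"[a-z']+", text.lower())
    if not sentences or not words:
        raise ValueError("text must contain at least one sentence")
    lengths = [len(s.split()) for s in sentences]
    transition_count = sum(w in TRANSITIONS for w in words)
    return {
        "sentences": len(sentences),
        "mean_sentence_length": statistics.mean(lengths),
        "sentence_length_stdev": statistics.pstdev(lengths),
        "transition_rate": transition_count / len(words),
    }

formal_report = (
    "The quarterly results exceeded expectations. "
    "However, costs rose in two regions. "
    "Therefore, the board approved a revised budget."
)
print(surface_features(formal_report))
```

Nothing in the returned numbers says who wrote the text. A person following business-writing conventions and a model imitating them would produce similar values, which is the limitation this section describes.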

Human Writing Can Look Like AI Writing

Certain writing styles are more likely than others to produce false positives. This is especially true of SEO-optimized content, whose planned, structured keyword placement often resembles AI patterns.

Non-native English speakers sometimes simplify their grammar and use repetitive sentence constructions to make sure their writing is clear. This approach, though considerate of the reader, can look algorithmic to a detector.

Academic writing follows strict rules of formal tone, specific citation formats, technical vocabulary, and logical development. These conventions can make the output look as if it came from an AI writing tool.

Professional communication makes clarity and conciseness the top priorities. The plain style recommended in business contexts as the clearest is, ironically, the one that often triggers detection.

Writers of clear, well-organized content should not be penalized, yet current detection technology still often equates polished writing with artificial writing.

Why Short Texts and Edited Content Fail Detection

With shorter text samples, detection precision drops considerably. A 100-word paragraph offers far less variation to analyze than a 1,000-word essay, which makes reliable analysis difficult.

A very short text, by its nature, cannot give detectors enough linguistic markers to make a confident judgment. The situation is made worse because these tools still output scores, misleading users into thinking that the results are reliable.

Heavily edited content presents another challenge. When writers revise their drafts by restructuring sentences, replacing words, and reorganizing paragraphs, they disrupt the consistent patterns that detectors expect to find.

Paradoxically, good editing practices that improve clarity and flow can lower detection accuracy. The very process of refining a draft may produce detection results that are inconsistent across platforms.
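A rough statistical analogy shows why small samples are a problem. Suppose, purely as an assumption, that a detector judged each sentence independently and reported the share of sentences that look "AI-like". The uncertainty in that share shrinks only with the square root of the number of sentences. The function name `margin_of_error` and the sentence counts below are illustrative.

```python
import math

def margin_of_error(p: float, n: int, z: float = 1.96) -> float:
    """Approximate 95% margin of error for a proportion `p` estimated
    from `n` independent observations (normal approximation)."""
    if n <= 0:
        raise ValueError("n must be positive")
    return z * math.sqrt(p * (1 - p) / n)

# Same underlying share (50%), different amounts of text.
for n in (5, 50, 500):
    print(n, round(margin_of_error(0.5, n), 3))
# 5 0.438
# 50 0.139
# 500 0.044
```

With five sentences the estimate could be off by more than 40 percentage points in either direction, so a confident-looking score on a short paragraph carries very little information.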

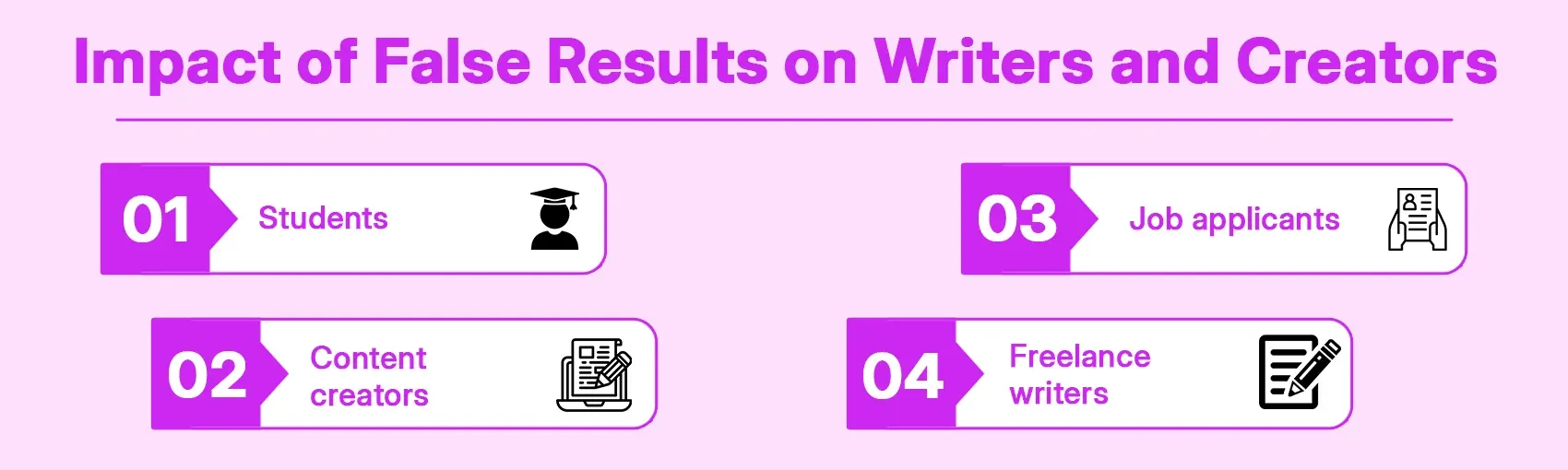

Impact of False Results on Writers and Creators

The following real-world consequences make it clear why detection accuracy is more than a theoretical concern when people's education, careers, and reputations are at stake.

Students

Students may be accused of academic dishonesty, which affects not only their academic records but also their future job prospects. A wrongful accusation of cheating causes psychological distress and may lead to a failing grade or even expulsion, all for work the student actually produced.

Content creators

Content creators are considered untrustworthy by the platforms when their original posts are marked as not original. Some of them are even demonetized, or their accounts are suspended, which has a direct negative impact on their source of income.

Job applicants

Job applicants are filtered out instantly when the cover letters or writing samples they submit set off the detection tools that are a part of the hiring process. They get no human review at all, nor do they get the opportunity to contest the authenticity of their work.

Freelance writers

Freelance writers face client disputes and payment problems when a detection score is the only basis for questioning their deliverables, even though they have met every creative requirement.

At the same time, false negatives let those who have used AI inappropriately walk away with clean hands, while honest writers are the ones subjected to scrutiny.

Are AI Detectors Reliable Enough Today?

Modern AI detectors should be treated as complementary aids rather than final judges. They can spot very clear AI-derived patterns in long passages with a fair amount of confidence, but they struggle with subtle cases and high-quality writing.

Detection accuracy varies widely across tools, content length, subject, and writing style. No single detector produces consistent results in all scenarios.

Detection technology keeps improving, but so do AI writing tools. The result is a perpetual arms race in which detection always lags behind generation.

For high-stakes decisions such as academic integrity disputes, hiring, and content authenticity checks, automated detection offers only limited support. It should guide human judgment, not replace it.

Treating detection scores as conclusive proof ignores the inherent uncertainty of these tools and the significant error margins they carry.
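A short calculation with Bayes' rule illustrates why a flag is not proof. All three input numbers below are hypothetical, chosen only to show the arithmetic; they are not measured rates for any real tool, and the function name `probability_ai_given_flag` is likewise an assumption.

```python
def probability_ai_given_flag(
    base_rate: float,
    true_positive_rate: float,
    false_positive_rate: float,
) -> float:
    """Probability that a flagged text is really AI-written (Bayes' rule)."""
    flagged_ai = base_rate * true_positive_rate
    flagged_human = (1 - base_rate) * false_positive_rate
    total_flagged = flagged_ai + flagged_human
    if total_flagged == 0:
        raise ValueError("no text would ever be flagged with these rates")
    return flagged_ai / total_flagged

# Hypothetical scenario: 10% of submissions are AI-written, the detector
# catches 90% of them, and it wrongly flags 5% of human-written texts.
print(round(probability_ai_given_flag(0.10, 0.90, 0.05), 3))  # 0.667
```

Under these assumed rates, one flagged submission in three comes from an honest writer, even though the detector sounds accurate on paper. The lower the share of AI-written submissions, the worse this ratio becomes.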

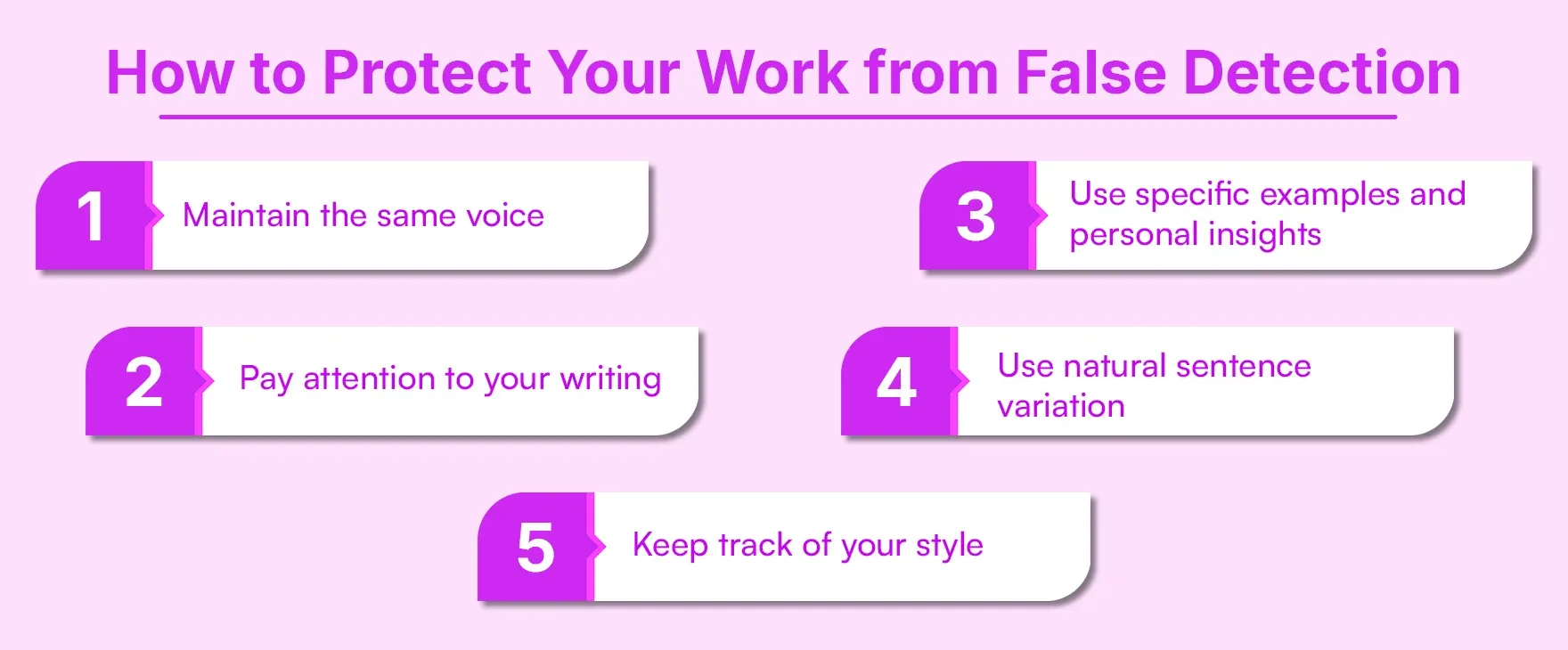

How to Protect Your Work from False Detection

You may not be able to completely avoid false positives, but certain practices will at least lessen the chances. Understanding the mechanics of detection can help whether you're protecting authentic human writing or learning about humanizing AI-generated content to make it sound more natural.

Maintain the same voice

Write in the style that comes most naturally to you rather than trying to second-guess detection tools. The combination of your own outlook, life experiences, and thought patterns is the strongest defense against being falsely accused.

Pay attention to your writing

Keep drafts, outlines, and research notes. This documentation shows how your writing developed and can support your claim of authorship if it is ever formally disputed.

Use specific examples and personal insights

AI tends to generate generic content. Detailed stories, original critiques, and personal views make your writing distinctive.

Use natural sentence variation

Mix short and long sentences, vary your transition words, and let your own rhythm guide your writing rather than sticking to mechanical patterns.

Keep track of your style

A portfolio of verified human-written samples gives you a basis for comparison if your authorship is ever doubted.

While these methods won't totally get rid of false positive risk, they do support your case if you ever need to prove the authenticity of your work.

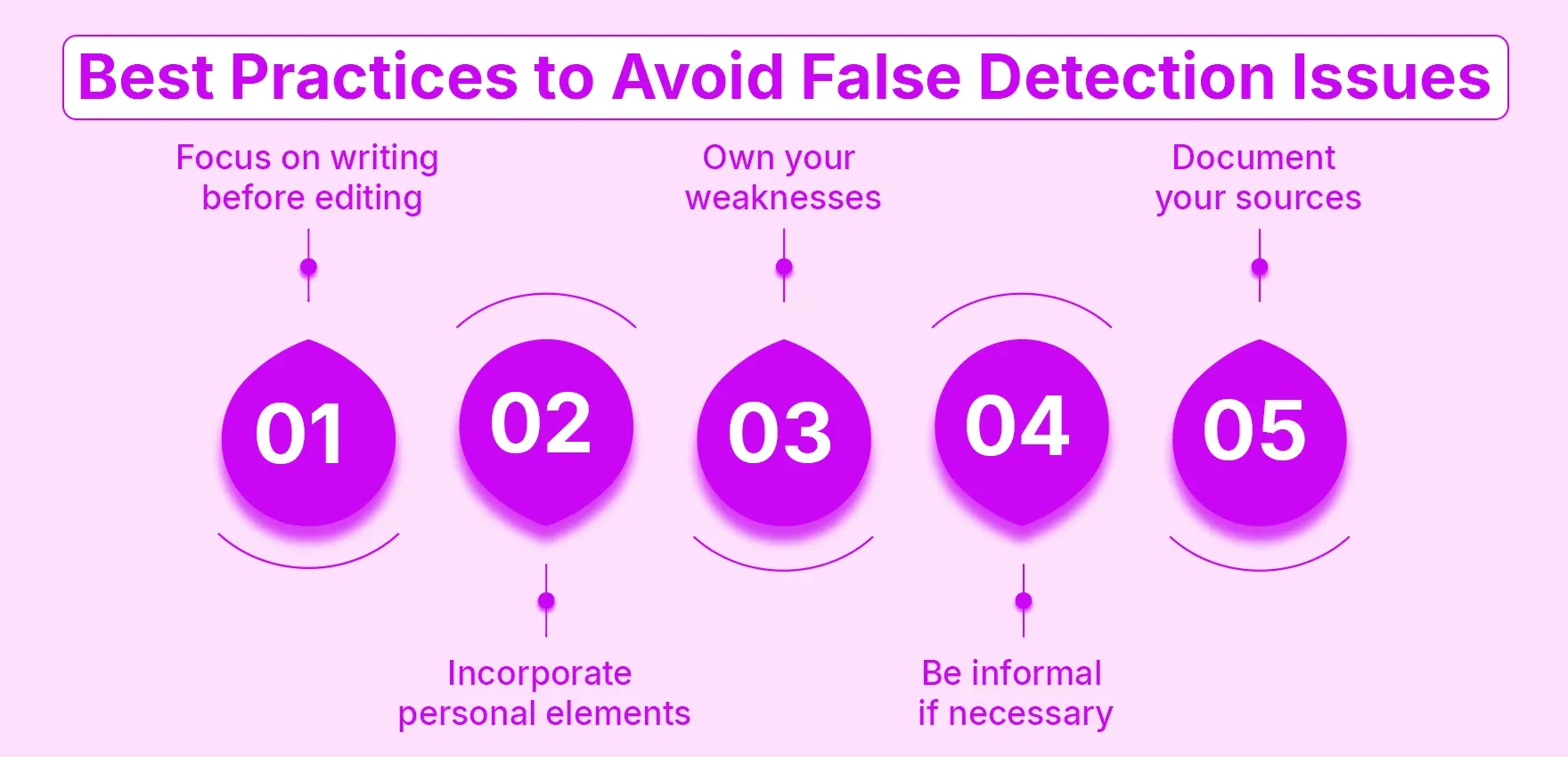

Best Practices to Avoid False Detection Issues

Beyond these protective measures, sound writing habits help preserve authenticity.

Focus on writing before editing

Concentrate on the clarity of your ideas rather than on detection. Authentic writing grows out of the writer's own thoughts, not out of attempts to evade algorithms.

Incorporate personal elements

Speak your mind, share your reactions, and give your interpretations. Offer experiences or observations that are unique to you and that no one else could provide.

Own your weaknesses

There is no such thing as perfect writing, and no writer can claim to produce it. Small quirks, occasional informal language, and natural variation are better indicators of authenticity than flawless prose.

Be informal if necessary

Where the context allows, a little informality, such as contractions, questions to the reader, and casual transitions, helps your human voice come through.

Document your sources

Thorough research backed by proper citations is a strong sign of genuine scholarly work rather than generated content.

Above all, write with honesty. Do not treat these practices as a cover-up for AI-generated content. The aim is to safeguard legitimate work, not to enable deception.

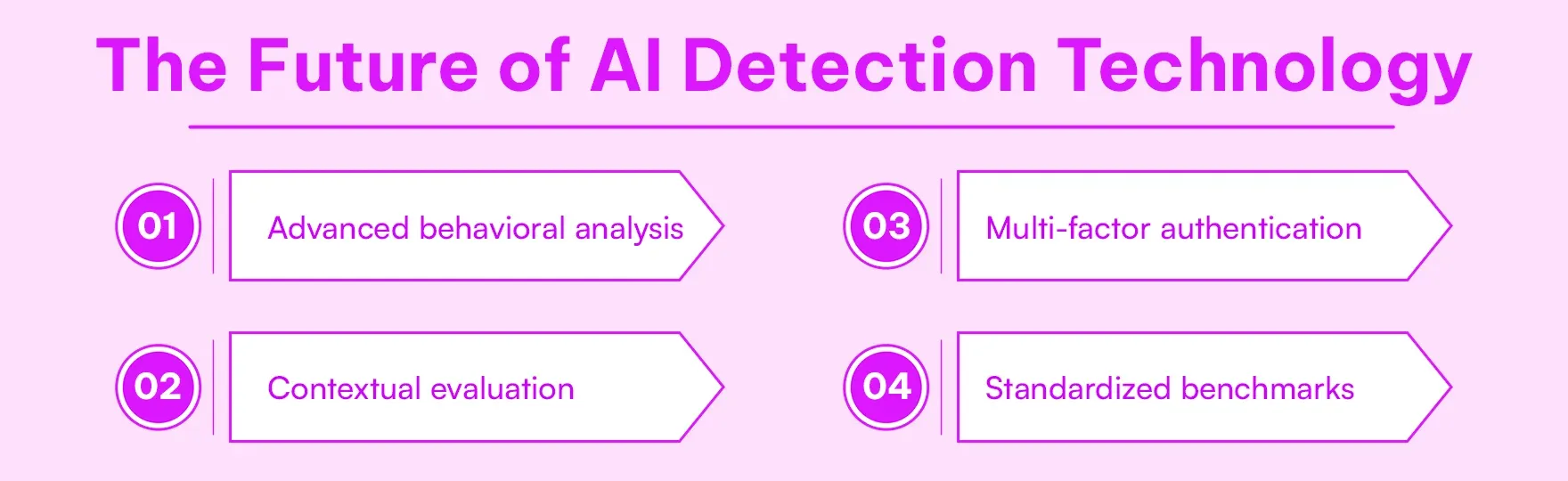

The Future of AI Detection Technology

AI detection is developing rapidly and may come to identify AI-generated content with greater accuracy. Several techniques are becoming more sophisticated and more widely used across industries.

Advanced behavioral analysis

Future tools may examine writing process metadata, typing patterns, revision history, and time spent rather than just the final text. This approach could provide stronger authenticity indicators.

Contextual evaluation

Detectors might consider an individual's writing history and style baseline. If your current work matches your established patterns, confidence in its authenticity increases regardless of AI-like features.

Multi-factor authentication

Verification could combine pattern detection with other methods, such as interviews about the content, revision evidence, or a demonstration of subject matter expertise.

Standardized benchmarks

Shared benchmarks across platforms would help by creating consistent evaluation criteria. Currently, each tool uses different thresholds and training data, producing contradictory results.

However, as detection improves, so do AI writing capabilities. This technological arms race suggests that human oversight will remain essential regardless of technical advances. As writers and content creators navigate this landscape, many are exploring the best undetectable AI tools to understand both sides of this evolving technology.

The future likely involves hybrid systems where technology flags potential issues but humans make final determinations based on comprehensive evidence.
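One way to picture such a hybrid arrangement is a triage rule in which the score can only request a human review and can never issue a verdict. This is a sketch under stated assumptions: the `Action` values, the `triage` function, the 0.8 threshold, and the 300-word minimum are all hypothetical, not recommendations drawn from any real system.

```python
from enum import Enum

class Action(Enum):
    NO_ACTION = "no action"
    HUMAN_REVIEW = "send to human reviewer"

def triage(
    score: float,
    word_count: int,
    review_threshold: float = 0.8,  # hypothetical cutoff
    min_words: int = 300,           # hypothetical minimum length
) -> Action:
    """Decide what to do with a detector score. The score alone never
    produces a verdict; at most it requests a human review."""
    if not 0.0 <= score <= 1.0:
        raise ValueError("score must be between 0 and 1")
    if word_count < min_words:
        # Too little text for the score to be meaningful.
        return Action.NO_ACTION
    if score >= review_threshold:
        return Action.HUMAN_REVIEW
    return Action.NO_ACTION

print(triage(0.95, 1000).value)  # send to human reviewer
print(triage(0.95, 100).value)   # no action
print(triage(0.30, 1000).value)  # no action
```

The design choice is that there is deliberately no "reject" or "penalize" outcome. Any consequence for the writer would come from the reviewer, who can weigh drafts, revision history, and other evidence.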

Conclusion

AI detectors serve several content-integrity purposes, but they have significant limitations that users must understand. They provide probability estimates, not definitive proof, and carry a substantial error margin.

False positives harm innocent writers, while false negatives allow dishonesty to continue, revealing how poorly suited the current technology is to high-stakes decisions.

Tools are emerging that improve reliability and streamline content verification, making detection smarter and more trustworthy than ever. For those looking to ensure their content passes detection while maintaining quality, learning how to humanize AI text effectively has become an essential skill in today's digital landscape.

Educators, employers, and content platforms should treat detection scores as preliminary indicators that require further human review.

1. Can AI detectors be 100% accurate?

No. They work on probabilities and pattern matching rather than certainties. Even the most accurate detectors sometimes fail because human and AI texts can have very similar patterns.

2. Why does my original work get flagged as AI-generated?

Your writing might follow formal patterns, use clear structure, or be well organized, all traits that AI writing also exhibits. Professional and academic writing styles are especially prone to false positives.

3. Do all AI detectors give the same results?

No, different detectors often give completely different scores for the same text. Each tool uses different algorithms and training data, leading to inconsistent results across platforms.

4. Can editing my work trigger AI detection?

Yes, heavy editing can actually increase detection scores. When you restructure sentences and polish your writing, it may create patterns that confuse detectors and produce unreliable results.

5. Are short texts harder to detect accurately?

Absolutely. Short paragraphs or brief content don't provide enough data for reliable analysis. Detectors need substantial text to identify consistent patterns, making short-form content particularly prone to errors.

6. Should I trust a 95% AI detection score?

Not entirely. High percentages represent statistical probability, not proof. A 95% score means the text matches AI patterns, but it doesn't guarantee the content was actually AI-written.

7. Can I avoid false detection by changing my writing style?

Don't change your natural style to game the system. Instead, maintain authenticity by adding personal insights, specific examples, and unique perspectives that clearly demonstrate human thought and experience.

8. What should I do if my work is falsely flagged?

Provide evidence of your writing process: drafts, outlines, research notes, and revision history. Request human review rather than accepting automated results, and calmly explain your authorship with supporting documentation.

9. Will AI detection technology improve in the future?

Detection will improve, but so will AI writing tools. This ongoing race means perfect detection may never exist. Future systems will likely combine technology with human judgment for better accuracy.

10. Are AI detectors unfair to non-native English speakers?

Unfortunately, yes. Non-native speakers often use simplified grammar and repetitive structures for clarity, which detectors frequently mistake for AI patterns. This creates an unfair bias against multilingual writers.