
TABLE OF CONTENTS

Key Takeaways

What Is an AI Detection Score?

How Do AI Detection Tools Work?

Interpreting AI Detection Scores

Limitations & Bias in AI Detection

Common Use Cases & Their Implications

Best Practices for Using AI Detection Scores

What is a "Good" or "Acceptable" Score?

The Future of AI Detection

How Accurate Are AI Detection Scores? A Closer Look at Metrics and Methodologies

Integrating AI Detection in Institutional Workflows

AI Detection vs. Plagiarism Detection: Key Differences and Why It Matters

Conclusion

FAQs

With the rise of AI-generated text from tools such as ChatGPT, Claude, and Gemini, understanding AI detection scores has become essential in education, content creation, business, and policymaking. An AI detection score is a percentage rating indicating how likely it is that a particular piece of text was generated by an AI model. But what does that really mean, and how should you interpret and apply the score? Here is a full explanation.

Key Takeaways

- Score = confidence, not composition ratio.
- Detectors look at linguistic patterns: token frequency, perplexity, burstiness, and classifier outputs.
- False positives, bias, and evasion are real challenges, especially for non-native speakers.
- Use detection as one component of a broader toolkit, not as absolute proof.
- Creators and evaluators alike should apply transparency, fairness, and continuous adaptation.

Understanding what an AI detection score does and does not mean is the foundation of a responsible approach to AI-assisted content and to ensuring authenticity in your work.

What Is an AI Detection Score?

An AI detection score is a numeric value (usually a percentage or a real number between 0 and 1) that estimates the likelihood that a text was generated by AI. For instance:

A 40% AI score doesn't mean that 40% of the text was produced by AI. It means the tool is 40% confident that the text, as a whole, was generated by AI.

These tools do not produce token-by-token attributions; instead, they look at broader patterns such as phrase repetition, structural uniformity, and statistical oddities.

How Do AI Detection Tools Work?

Most detectors combine linguistic analysis and machine learning through the following techniques:

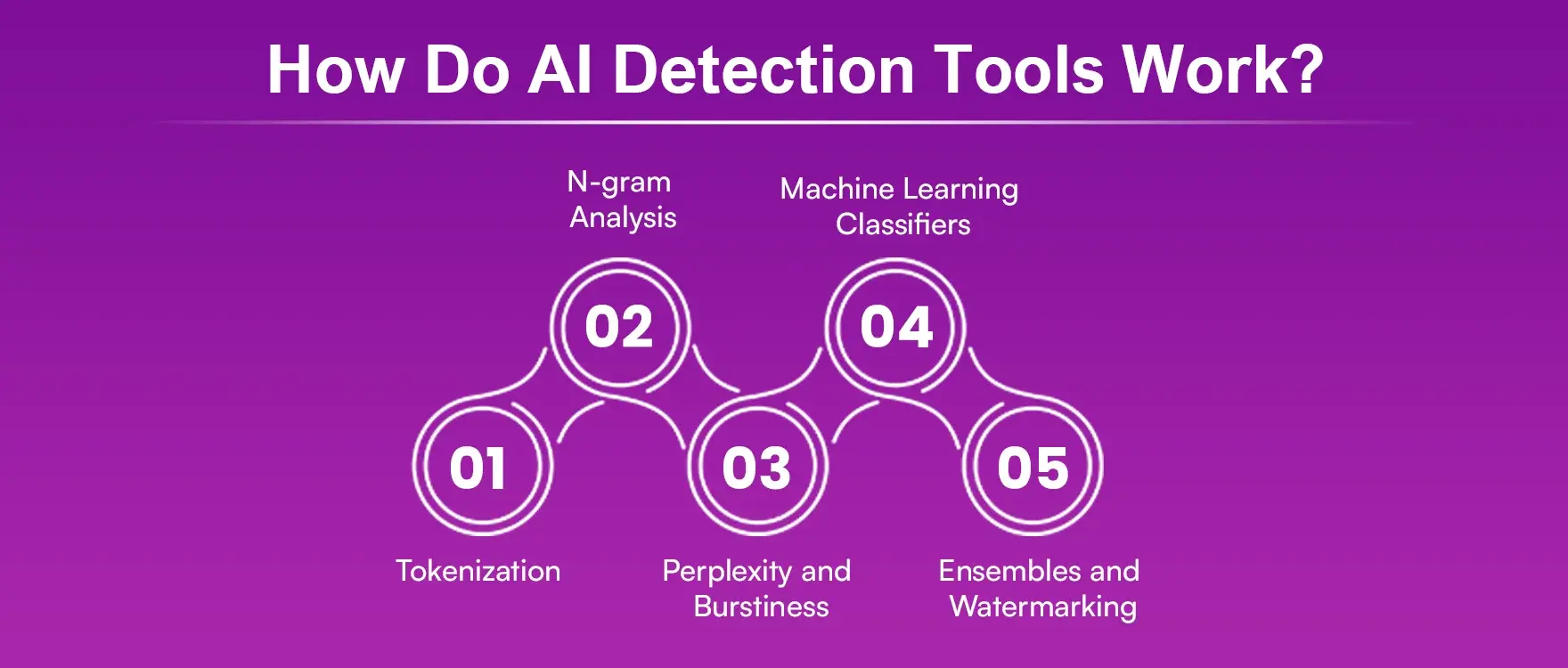

1. Tokenization: Splitting text into units (words, sub-words, or characters) so that their components can be analyzed.

2. N-gram Analysis: Counting how often sequences of n consecutive tokens occur in a text. AI-generated text tends to repeat certain n-grams.

3. Perplexity and Burstiness: Perplexity measures how unpredictable a text is to a language model; burstiness measures how much sentence length and structure vary. AI-generated content typically shows low perplexity and low burstiness.

4. Machine Learning Classifiers: Models trained on labeled datasets of human-written and AI-generated text output a probabilistic score.

5. Ensembles and Watermarking: Some tools combine multiple models, and some generators embed hidden watermarks that detectors can check to cross-verify the content.
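The five techniques above can be sketched in a few lines of Python. This is a minimal illustration, not how any particular detector is built: tokenization is a simple word-level regex (real tools use sub-word tokenizers), the per-token log-probabilities used for perplexity are assumed to come from some language model you already have, and the classifier weights and the unweighted ensemble average are hypothetical placeholders rather than trained values. Watermark checking is omitted because it depends on the generator's own scheme.

```python
import math
import re
from collections import Counter
from statistics import mean, pstdev


def tokenize(text: str) -> list[str]:
    """1. Tokenization: lowercase word tokens via a simple regex (not a sub-word tokenizer)."""
    return re.findall(r"[a-z0-9']+", text.lower())


def ngram_repetition(tokens: list[str], n: int = 2) -> float:
    """2. N-gram analysis: share of n-gram occurrences that repeat an earlier n-gram."""
    grams = [tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1)]
    if not grams:
        return 0.0
    counts = Counter(grams)
    repeats = sum(count - 1 for count in counts.values())
    return repeats / len(grams)


def burstiness(text: str) -> float:
    """3a. Burstiness: coefficient of variation of sentence lengths, in tokens."""
    sentences = [s for s in re.split(r"[.!?]+", text) if s.strip()]
    lengths = [len(tokenize(s)) for s in sentences]
    if len(lengths) < 2 or mean(lengths) == 0:
        return 0.0
    return pstdev(lengths) / mean(lengths)


def perplexity(token_logprobs: list[float]) -> float:
    """3b. Perplexity: exp of the mean negative log-probability per token.

    The natural-log probabilities are assumed to come from an external language model.
    """
    return math.exp(-mean(token_logprobs))


def classifier_probability(features: dict[str, float],
                           weights: dict[str, float],
                           bias: float) -> float:
    """4. Classifier: logistic regression over features; weights here are hypothetical."""
    z = bias + sum(weight * features[name] for name, weight in weights.items())
    return 1.0 / (1.0 + math.exp(-z))


def ensemble(scores: list[float]) -> float:
    """5. Ensemble: unweighted mean of several detectors' probabilities."""
    return mean(scores)
```

For instance, `perplexity([math.log(0.5)] * 4)` comes out at about 2, meaning the model was on average as uncertain as a choice between two equally likely tokens. In a trained detector, the sign and size of each classifier weight would be learned from labeled data rather than set by hand.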

Interpreting AI Detection Scores

How a score should be read depends heavily on the tool, because each one uses its own thresholds and reporting conventions.

1. Turnitin

It suppresses low scores (below 20%) to reduce the impact of false positives; scores above that threshold are presented as a percentage estimate.

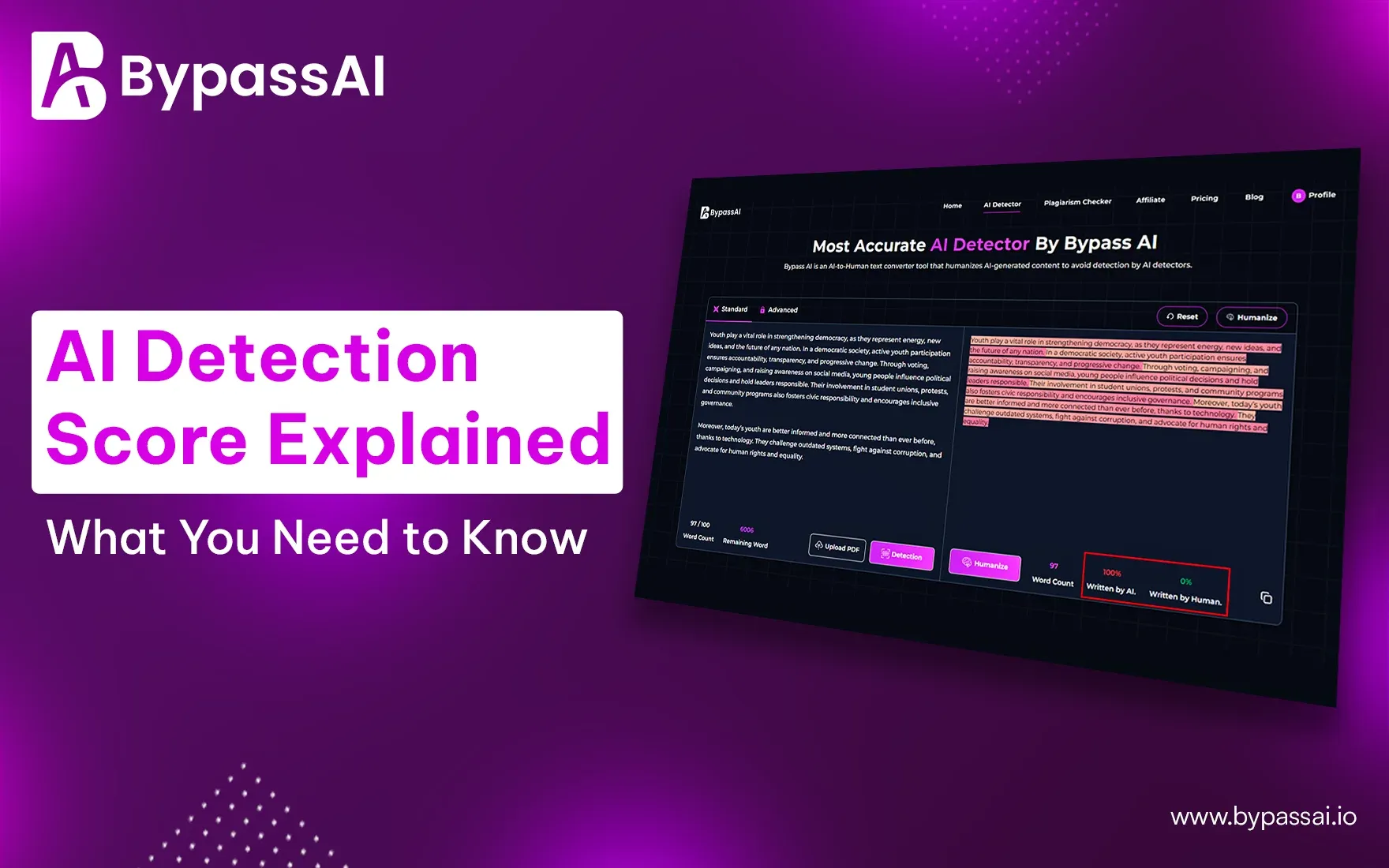

2. Bypassai.io

It offers two models: "Lite" (98% accuracy, under 1% false positives) and "Turbo" (over 99% accuracy).

3. False Positives & Negatives

False positives occur even on classic human-written texts such as the U.S. Constitution.

Studies have found that many detectors average below 80% accuracy and show a strong bias against non-native English speakers.
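Why false positives matter so much can be shown with Bayes' rule: when most submissions are human-written, even a small false-positive rate produces a large share of wrongly flagged texts. The sketch below assumes you know (or can estimate) a detector's sensitivity, its false-positive rate, and the share of AI-generated texts in the pool being checked; the numbers in the usage note are hypothetical, not measurements of any real tool.

```python
def prob_ai_given_flag(sensitivity: float,
                       false_positive_rate: float,
                       prevalence: float) -> float:
    """Bayes' rule: probability that a flagged text is actually AI-generated.

    sensitivity         -- P(flagged | AI-generated)
    false_positive_rate -- P(flagged | human-written)
    prevalence          -- share of AI-generated texts in the pool being checked
    """
    for p in (sensitivity, false_positive_rate, prevalence):
        if not 0.0 <= p <= 1.0:
            raise ValueError("all inputs must be probabilities in [0, 1]")
    true_flags = sensitivity * prevalence
    false_flags = false_positive_rate * (1.0 - prevalence)
    total_flags = true_flags + false_flags
    if total_flags == 0.0:
        raise ValueError("the detector never flags anything under these inputs")
    return true_flags / total_flags
```

With a hypothetical detector that catches 90% of AI text and wrongly flags 5% of human text, applied to a pool in which 10% of texts are AI-generated, `prob_ai_given_flag(0.9, 0.05, 0.1)` returns about 0.67: roughly one in three flagged texts would be human-written.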


Limitations & Bias in AI Detection

The main limitations are:

1. Unreliable Flagging

Many detectors produce false positives or negatives. For example, GPTZero and Turnitin have been shown to misclassify substantial text samples.

2. Non-Native Language Bias

Non-native English writers have been disproportionately flagged.

3. Adversarial Techniques

Paraphrasing and injecting human edits into an otherwise synthesized document greatly diminishes detection possibilities.

4. The Evolution of AI

As new models are released (such as GPT-4 and Bard), detectors must be updated continually.

Common Use Cases & Their Implications

Here are some common use cases and their implications:

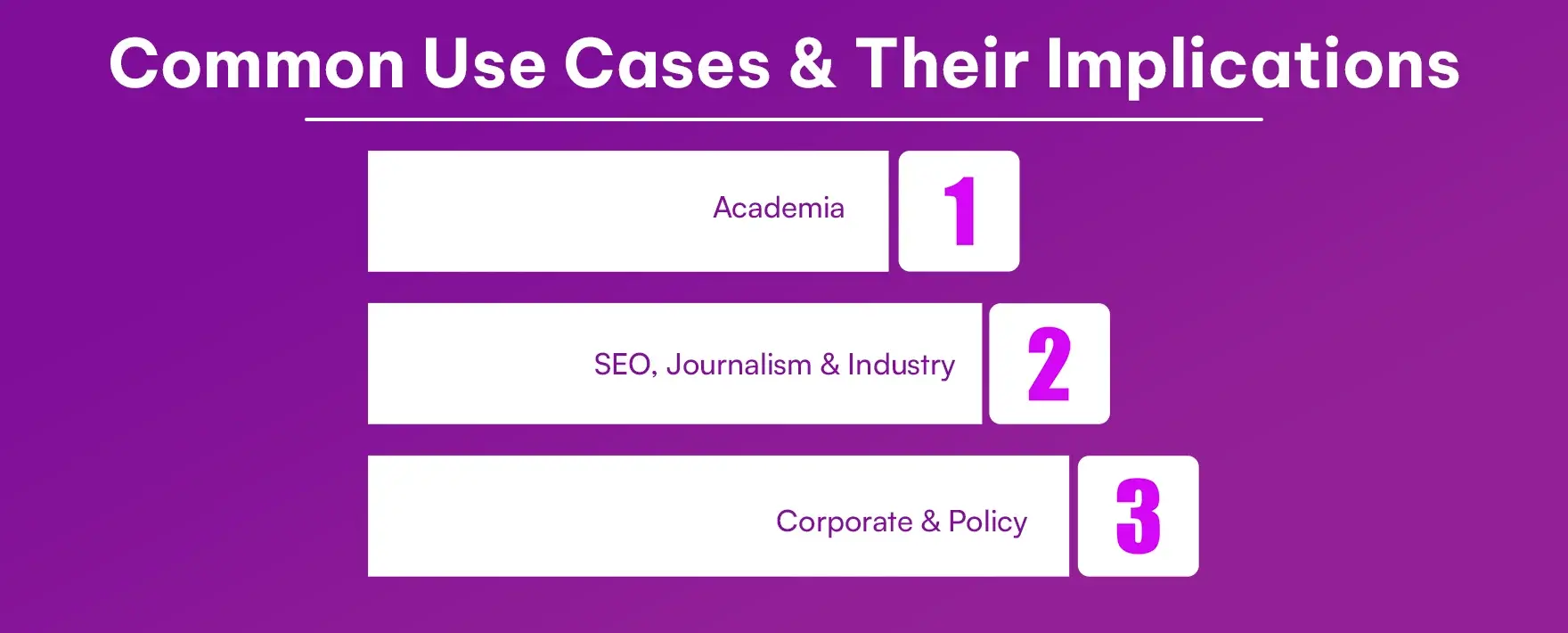

Academia

- Turnitin: Turnitin has offered AI detection since April 2023. Tools like this split submissions into segments, score each one, and report an overall percentage estimate.

Detection scores are starting points, not proof. Educators should discuss a flagged submission with the student rather than immediately penalizing them.
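Segment-level scoring can be sketched as follows. This is an illustration under stated assumptions, not Turnitin's actual method: the 50-word segment size, the `score_segment` callable (standing in for whatever model scores a segment), and the 0.5 flagging threshold are all hypothetical. It deliberately reports two aggregates, because a document-level confidence and the share of segments flagged can diverge, which is why it matters to know which one a given tool reports.

```python
from statistics import mean
from typing import Callable


def split_segments(text: str, words_per_segment: int = 50) -> list[str]:
    """Split text into consecutive chunks of at most `words_per_segment` words."""
    words = text.split()
    return [" ".join(words[i:i + words_per_segment])
            for i in range(0, len(words), words_per_segment)]


def aggregate(segment_probs: list[float], threshold: float = 0.5) -> dict[str, float]:
    """Combine per-segment AI probabilities into two document-level numbers."""
    if not segment_probs:
        return {"mean_confidence": 0.0, "flagged_share": 0.0}
    flagged = sum(1 for p in segment_probs if p >= threshold)
    return {
        # Average confidence that a segment is AI-generated.
        "mean_confidence": mean(segment_probs),
        # Fraction of segments whose probability reaches the threshold.
        "flagged_share": flagged / len(segment_probs),
    }


def score_document(text: str,
                   score_segment: Callable[[str], float],
                   words_per_segment: int = 50) -> dict[str, float]:
    """Score each segment with the supplied (hypothetical) model, then aggregate."""
    segments = split_segments(text, words_per_segment)
    return aggregate([score_segment(s) for s in segments])
```

For example, `aggregate([0.4, 0.4, 0.4, 0.4])` gives a mean confidence of 0.4 but a flagged share of 0.0: a "40%" confidence reading does not mean 40% of the text was flagged.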

SEO, Journalism & Industry

Publishers can use detectors to check originality, but editors should also apply human judgment.

Corporate & Policy

Businesses increasingly mix AI and human input in their work. Detection tools can help document AI usage, but they should not be the only evidence used for compliance.

Best Practices for Using AI Detection Scores

Best practices for using AI detection scores include:

Use Scores as Guidelines

- Let scores be indicators, never set-in-stone judgments.

Follow Up Through Human Review

- Consider context, style changes, metadata, differing drafts, and source materials.

Understand Your Tool's Limits

- Learn your tool's false-positive and false-negative rates. Where possible, use ensemble methods or watermarking to improve reliability.

Avoid Bias

- Be mindful of language variation and fairness, particularly with education in mind.

Adapt Your Writing Process

- Aim for natural variation in sentence length and structure (burstiness and unpredictability) while preserving clarity.

What is a "Good" or "Acceptable" Score?

Before relying on any AI detector, consider how it defines an acceptable score:

Simply put, with Bypass AI, scores below 20% are disregarded, while anything above 20% indicates some level of AI risk.

Bypassai.io: Offers different confidence levels. The Lite model may treat a slight AI edit as low risk, whereas Turbo takes it much more seriously.

Context Matters: What counts as an acceptable score varies by use case. Academic work needs stricter thresholds than marketing copy.
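The threshold guidance above can be expressed as a small lookup. Only the 20% cut-off comes from this article; the per-context limits (stricter for academic work, looser for marketing copy) are hypothetical values chosen for illustration, and any real policy should set its own.

```python
# Hypothetical upper limits for an "acceptable" score, per context.
# Only the 20% low-score cut-off is taken from the article.
LOW_SCORE_CUTOFF = 0.20
ACCEPTABLE_MAX = {
    "academic": 0.20,   # strictest: anything at or above the cut-off gets a human look
    "marketing": 0.50,  # looser: some AI assistance is tolerated
}


def assess(score: float, context: str) -> str:
    """Map a 0-1 detection score to a coarse label for a given use case."""
    if not 0.0 <= score <= 1.0:
        raise ValueError("score must be between 0 and 1")
    if context not in ACCEPTABLE_MAX:
        raise ValueError(f"unknown context: {context!r}")
    if score < LOW_SCORE_CUTOFF:
        return "low"         # below the cut-off: treated as noise
    if score < ACCEPTABLE_MAX[context]:
        return "acceptable"  # some AI risk, but within this context's tolerance
    return "review"          # route to human review, never an automatic verdict
```

Keeping the limits in a table rather than hard-coding them makes it easy for an institution or editorial team to state its own thresholds explicitly.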


The Future of AI Detection

Several developments are shaping the future of AI detection:

Advanced methods: Watermarking during training, ensemble defences, and semantic retrieval are some methods that are emerging to aid detection.

An Ongoing Arms Race: Detectors and generative models are locked in an ongoing contest, so new methods such as DetectGPT, watermark-based classifiers, and AI-capable retrieval systems are to be expected.

Regulatory use: AI detection may one day be used formally in publishing ethics, contract compliance, or policy enforcement, but only once detection is accurate and fair.
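Two of the methods named above have simple scoring rules that can be sketched directly. The watermark test follows the "green list" idea from Kirchenbauer et al. (2023): a watermarking generator favours a pseudo-randomly chosen fraction `gamma` of the vocabulary at each step, so watermarked text contains more "green" tokens than chance predicts, and a z-score measures how many more. The DetectGPT-style score compares a text's log-probability with those of slightly perturbed rewrites. Both functions assume the hard parts (identifying green tokens with the generator's key, producing perturbations, and computing log-probabilities with a language model) happen elsewhere.

```python
import math
from statistics import mean, stdev


def watermark_z_score(green_tokens: int, total_tokens: int, gamma: float = 0.5) -> float:
    """Green-list watermark test: z = (|s|_G - gamma*T) / sqrt(T*gamma*(1-gamma)).

    green_tokens -- tokens that fell in the generator's green list (computed elsewhere)
    total_tokens -- T, the number of tokens scored
    gamma        -- fraction of the vocabulary placed on the green list at each step
    """
    if total_tokens <= 0 or not 0.0 < gamma < 1.0:
        raise ValueError("need total_tokens > 0 and 0 < gamma < 1")
    expected = gamma * total_tokens
    spread = math.sqrt(total_tokens * gamma * (1.0 - gamma))
    return (green_tokens - expected) / spread


def detectgpt_score(original_logprob: float, perturbed_logprobs: list[float]) -> float:
    """Normalized perturbation discrepancy in the spirit of DetectGPT.

    Model-generated text tends to sit near a local maximum of the model's
    log-probability, so perturbed rewrites score noticeably lower than the original.
    """
    if len(perturbed_logprobs) < 2:
        raise ValueError("need at least two perturbed log-probabilities")
    spread = stdev(perturbed_logprobs)
    if spread == 0.0:
        raise ValueError("perturbed log-probabilities have zero spread")
    return (original_logprob - mean(perturbed_logprobs)) / spread
```

For example, 75 green tokens out of 100 with `gamma = 0.5` gives z = 5.0, far above what unwatermarked text would typically produce; how high the decision threshold should be is a policy choice that trades false positives against missed detections.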

How Accurate Are AI Detection Scores? A Closer Look at Metrics and Methodologies

Looking at the percentage alone is never enough to judge the reliability of AI detection scores. Sensitivity and specificity depend heavily on the datasets used to train these systems and on their model architectures.

For example, Bypassai.io aims for high precision (above 99%) at the expense of recall: it tries to avoid false positives but sometimes misses subtly edited AI-generated content. Open-source tools may lean toward higher recall and risk flagging the work of genuine human authors, which raises ethical concerns in education and publishing.

Academic research, including work from the University of Maryland, has shown that the average accuracy of several popular AI detection tools rarely exceeds 80%, and that slight changes in syntax or phrasing can greatly alter the result. Such findings call for cautious interpretation of detector outputs and support multi-level verification.
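The precision/recall trade-off described above can be made concrete with a confusion matrix. The sketch below computes the standard metrics and shows how moving a decision threshold shifts errors between false positives and false negatives; the scores and labels in the usage note are invented, and returning 0.0 when a denominator is zero is a convention assumed here.

```python
from dataclasses import dataclass


def _ratio(num: int, den: int) -> float:
    """Safe division; returns 0.0 when the denominator is zero (a convention, not a standard)."""
    return num / den if den else 0.0


@dataclass
class Confusion:
    tp: int  # AI-generated text correctly flagged
    fp: int  # human text wrongly flagged (false positive)
    tn: int  # human text correctly passed
    fn: int  # AI-generated text missed (false negative)

    def precision(self) -> float:
        return _ratio(self.tp, self.tp + self.fp)

    def recall(self) -> float:  # also called sensitivity
        return _ratio(self.tp, self.tp + self.fn)

    def specificity(self) -> float:
        return _ratio(self.tn, self.tn + self.fp)

    def false_positive_rate(self) -> float:
        return _ratio(self.fp, self.fp + self.tn)

    def accuracy(self) -> float:
        return _ratio(self.tp + self.tn, self.tp + self.fp + self.tn + self.fn)


def confusion_at(scores: list[float], is_ai: list[bool], threshold: float) -> Confusion:
    """Flag every text whose score is >= threshold and tally the outcomes."""
    tp = fp = tn = fn = 0
    for score, label in zip(scores, is_ai):
        flagged = score >= threshold
        if flagged and label:
            tp += 1
        elif flagged:
            fp += 1
        elif label:
            fn += 1
        else:
            tn += 1
    return Confusion(tp, fp, tn, fn)
```

With scores `[0.9, 0.8, 0.6, 0.4, 0.3, 0.1]` and labels `[True, True, False, True, False, False]`, a 0.5 threshold gives precision and recall of 2/3 each; raising it to 0.85 lifts precision to 1.0 but drops recall to 1/3, the same kind of trade-off a high-precision tool makes.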

Integrating AI Detection in Institutional Workflows

For institutions, especially in academia and corporate publishing, AI detection scores should not be standalone judgments; they need to be integrated into broader integrity frameworks.

Academic Institutions:

Many universities now follow multi-step protocols in which a high AI score:

Triggers a committee review process.

Mandates that the student submit drafts, research notes, or time-stamped logs.

May allow for guided interviews rather than immediate penalties, emphasizing ethical education rather than punishment.

Student contracts and honour codes are also being revised to state explicitly which AI tools students may use, for example permitting Grammarly or language assistance while prohibiting AI generation of full sentences or paragraphs.

AI Detection vs. Plagiarism Detection: Key Differences and Why It Matters

AI detection tools are often mistaken for plagiarism checkers, because both are used to verify the authenticity of content. They work quite differently, however:

| Feature | AI Detection | Plagiarism Detection |
|---|---|---|
| Purpose | Identifies likely AI-generated content | Finds copied or non-original content from existing sources |
| Method | Analyzes sentence structure, perplexity, burstiness, and linguistic patterns | Compares input text against databases, journals, and websites |
| Output | Probability/score that the text is AI-generated | Percentage of matched content and source attribution |
| Tools | GPTZero, Originality.ai, Turnitin AI Module | Turnitin (original), Copyscape, Grammarly Plagiarism Checker |

Why this matters: AI-generated text can be highly original and may not match any existing source, so a plagiarism checker may not flag it. Yet it lacks the human insight and authenticity we attribute to work by a conscientious human author. Conversely, plagiarized text may be entirely human-written, yet it is clearly unethical. Educators and publishers therefore need both tools to judge work for originality and integrity.

Using plagiarism and AI detection together is therefore fast becoming standard practice in high-stakes settings such as journal publishing, thesis evaluation, and branded content auditing.
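Plagiarism detection compares a submission against known sources rather than analysing its style. The minimal sketch below measures containment, the share of a submission's word trigrams that also appear in a source; the small in-memory `sources` dictionary stands in for the databases, journals, and websites that real checkers index, and the trigram size is an assumption.

```python
import re


def word_ngrams(text: str, n: int = 3) -> set[tuple[str, ...]]:
    """Set of lowercase word n-grams in the text."""
    words = re.findall(r"[a-z0-9']+", text.lower())
    return {tuple(words[i:i + n]) for i in range(len(words) - n + 1)}


def containment(submission: str, source: str, n: int = 3) -> float:
    """Fraction of the submission's n-grams that also occur in the source."""
    sub = word_ngrams(submission, n)
    if not sub:
        return 0.0
    return len(sub & word_ngrams(source, n)) / len(sub)


def best_match(submission: str, sources: dict[str, str], n: int = 3) -> tuple[str, float]:
    """Return the name of the most-overlapping source and its containment score."""
    if not sources:
        raise ValueError("no sources to compare against")
    scored = {name: containment(submission, text, n) for name, text in sources.items()}
    name = max(scored, key=scored.get)
    return name, scored[name]
```

A high containment score points to copied passages and names the source, which matches the "percentage of matched content and source attribution" output in the table; it says nothing about whether a human or a model wrote the text.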

Conclusion

An AI detection score is not a verdict. It is statistical evidence suggesting that some AI generation may be present in the content. Detection tools combine language models, repetition checks, sentence-level analyses, and other AI techniques, and all of them have imperfections. Scores should encourage conversation, not decree truth. In academic, editorial, or compliance settings, the most robust approach combines AI detection with human oversight, fairness checks, drafts, metadata, and transparent policies.

FAQs

1. What is an AI detection score?

An AI detection score is a numerical or percentage-based result from an AI content detector that indicates how likely it is that a piece of text was generated by an AI model rather than written by a human.

2. How is the AI detection score calculated?

The score is calculated using machine learning models that analyze writing patterns, syntax, sentence structure, word predictability, and other linguistic features commonly found in AI-generated content.

3. What does a high AI detection score mean?

A high score (e.g., 90–100%) usually means the detector believes the content is most likely AI-generated. However, the score is a probability, not proof that the content was AI-generated.

4. What score is considered safe or human-like?

Generally, a score below 20% is considered low and likely to indicate human-written text. A 0% score means the detector did not identify the content as AI-generated. However, thresholds vary between detection tools.

5. Can AI detection scores be wrong?

Yes, they are not foolproof. Many detectors can produce false positives (marking human content as AI) or false negatives (failing to detect AI content).